Why we're not building a chatbot

Chat interfaces feel modern but produce ephemeral, indefensible output. Professional tax research needs structured answers you can file, reproduce, and defend.

By Auryth Team

Your client calls. They want to know how you arrived at the withholding tax position you recommended six weeks ago. You open your AI tool — and scroll through a chat history that reads like a stream-of-consciousness monologue. Somewhere between a VAT question and a corporate reorganization thread, the relevant exchange sits buried. Maybe. If the session wasn’t deleted.

This is not an edge case. This is the default experience of every professional using a chatbot for serious research.

The chatbot paradigm has a structural problem

The entire legal AI industry converged on chat. ChatGPT trained hundreds of millions of users to expect a text box and a conversation. Every competitor followed. The result: professional-grade tax research crammed into an interface designed for casual conversation.

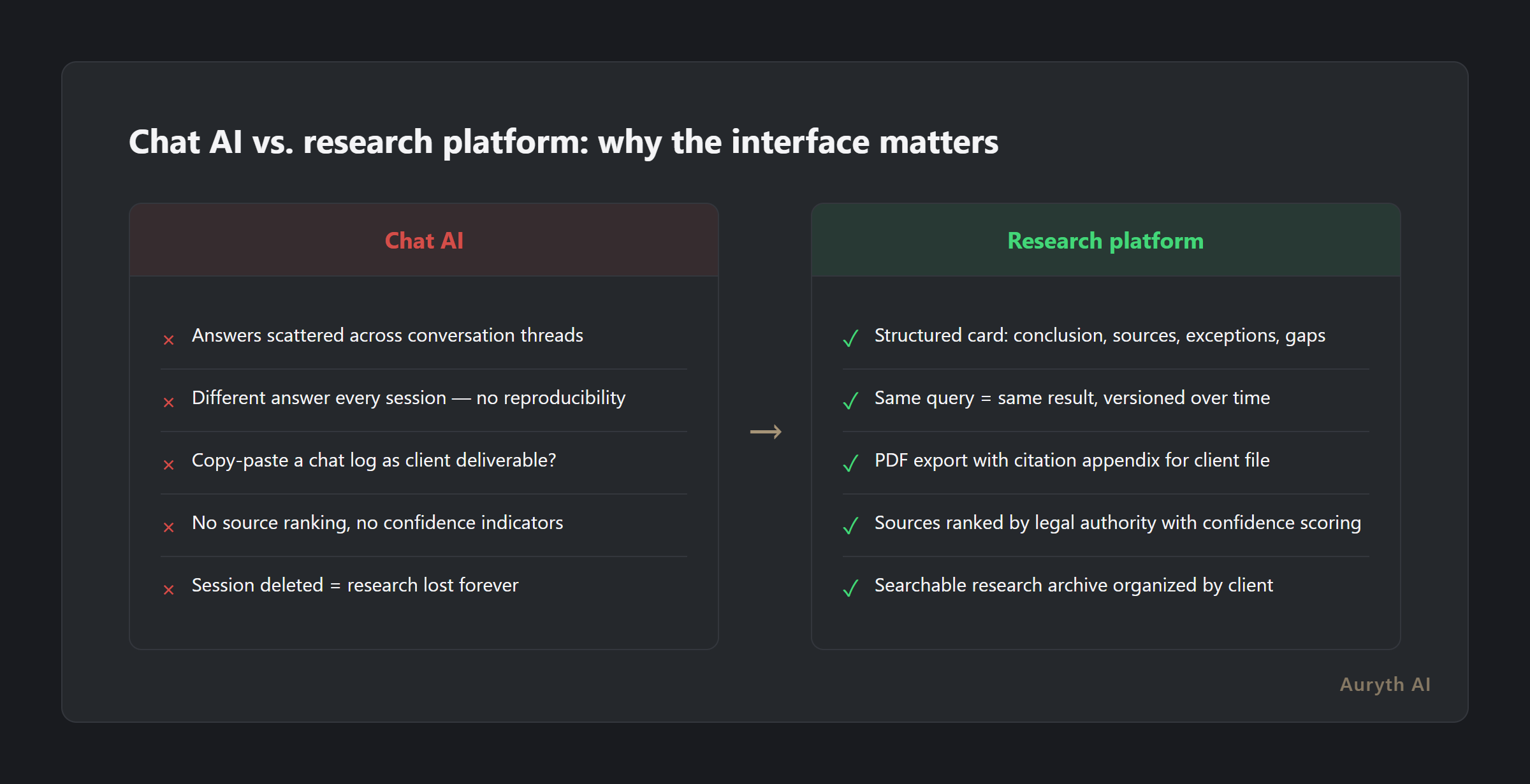

The problem is not accuracy — though that matters too. The problem is ephemerality. Chat conversations are:

- Unstructured. The answer is scattered across multiple exchanges, mixed with your follow-up questions and the AI’s hedging

- Unreproducible. Ask the same question tomorrow and get a different answer — with no way to compare

- Unexportable. Try turning a chat transcript into a client memo. You will rewrite everything from scratch

- Indefensible. When a colleague, regulator, or client asks “how did you reach this conclusion?” — a chat log is not an answer

Since mid-2023, hundreds of cases of AI-generated legal hallucinations have been documented globally, with more than 50 in July 2025 alone involving fabricated citations. Most of these involved professionals who treated chat output as research. It wasn’t.

The accountability test

Before relying on any AI tool for professional work, ask three questions:

| Question | Chat AI | Research platform |

|---|---|---|

| Can I reproduce this result next week? | No — different session, different answer | Yes — same query, same structured output |

| Can I export this as a client deliverable? | Copy-paste a conversation transcript? | Structured output with citations, ready to file |

| Can I defend this if challenged? | ”The AI told me in a chat” | Documented research with source chain and confidence indicators |

If the answer to any of these is no, you are not doing research. You are having a conversation.

If you can’t reproduce it, you can’t defend it.

Why chat works — and where it stops

To be fair: chat interfaces are not useless. They work well for low-stakes tasks where accountability does not matter. Client intake on a website, brainstorming session notes, drafting an initial email — chat is fine.

The failure happens when the stakes rise. When a Belgian tax advisor relies on an AI answer for a ruling request to the DVB (Dienst Voorafgaande Beslissingen), that answer needs to be traceable to specific legal sources. When an accountant prepares a position for an ITAA peer review, the research must be reproducible. When a notary structures an estate plan, the analysis must be exportable as a formal memo.

Chat cannot do any of this. Not because the underlying model is bad, but because the interface is structurally incapable of producing defensible work product.

The Belgian regulatory reality

The Orde van Vlaamse Balies (OVB) published detailed AI guidelines requiring professionals to document their AI tool usage, maintain source traceability, and demonstrate that their AI-assisted work is legally and ethically sound. The Belgian Data Protection Authority followed in September 2024 with comprehensive guidance on AI systems and GDPR compliance.

The EU AI Act, fully applicable from August 2026, requires automatic structured logging for high-risk AI systems under Article 12. A chat transcript does not qualify as structured logging.

Meanwhile, insurers are responding to AI risk with near-absolute exclusions. Professional liability policies increasingly exclude coverage for claims “in any way related to AI” — meaning if your AI-assisted advice goes wrong and your documentation is a chat history, your insurer may decline the claim entirely.

The regulatory direction is clear: auditability is not optional. Every tool used in professional practice must produce output that can be reviewed, reproduced, and defended. Chat architectures were never designed for this.

What we build instead: dossier-proof research

Dossier-proof AI — dossiervaste AI — means every answer the system produces can be filed, exported, and defended as professional work product. Not a conversation. A structured research deliverable.

The difference is architectural, not cosmetic:

| Aspect | Chat paradigm | Dossier-proof research |

|---|---|---|

| Output format | Free-text conversation | Structured card: conclusion, sources, exceptions, confidence, gaps |

| Source chain | Inline mentions, if any | Every claim linked to a specific provision, ranked by legal authority |

| Reproducibility | New session = new answer | Same query produces same structured result, versioned over time |

| Export | Copy-paste a chat log | PDF with citation appendix, ready for client file |

| Organization | Flat chat history | Client folders, searchable research archive |

| Confidence | The AI sounds equally certain about everything | Explicit confidence indicators — the system tells you when it is uncertain |

This is not a feature preference. It is the difference between a tool that helps you brainstorm and a tool that helps you practice.

The industry already knows chat is not enough

Legal tech’s defining shift in 2025 was the move from chatbot-style AI to agentic, embedded systems. Thomson Reuters, LexisNexis, and Harvey all added agentic and workflow-embedded features alongside their chat interfaces at ILTACON 2025. Industry analysts predict that AI systems will increasingly provide structured interfaces rather than empty text boxes.

The question is not whether the industry moves beyond chat. It is whether your firm moves before a professional liability incident forces the issue.

A significant share of professional service time is wasted searching for answers scattered across unstructured knowledge systems. Chat histories make this worse, not better. Every unanswered question about “what did the AI say about that case three months ago?” represents institutional knowledge that was generated and immediately lost.

Related articles

- What is authority ranking — and why your legal AI tool probably ignores it →

- What is confidence scoring — and why it’s more honest than a confident answer →

- How to evaluate a legal AI tool: 10 questions that actually matter →

How Auryth TX applies this

Every answer Auryth TX produces follows the same structure: a clear conclusion, a ranked source chain weighted by legal authority, an exception map flagging cross-domain risks, confidence indicators showing where the system is certain and where it is not, and explicit gap detection identifying what the system could not find.

The output is designed for your client file, not your chat history. Export as PDF with a full citation appendix. Organize research by client, by matter, by question. Return to a query months later and get the same structured result — updated if the underlying law has changed.

We did not build a chatbot with better footnotes. We built a research platform where every answer is defensible by design.

Nooit meer adviseren op basis van verouderd onderzoek — wij waarschuwen u automatisch wanneer bronnen wijzigen.

Sources: 1. Charlotin, D. (2025). “AI Hallucination Cases Database.” HEC Paris. 2. Dahl, M. et al. (2024). “Large Legal Fictions: Profiling Legal Hallucinations in Large Language Models.” Journal of Legal Analysis, 16(1), 64-93. 3. Orde van Vlaamse Balies (2024). “AI-richtlijnen voor advocaten.” 4. Belgian Data Protection Authority (2024). “Informative Brochure: AI Systems and GDPR.” September 2024. 5. EU Regulation 2024/1689 (AI Act), Article 12: Automatic logging requirements for high-risk AI systems.