Why we publish our accuracy — and why almost nobody else does

Accuracy claims without published metrics are marketing. Here's what it takes to measure legal AI honestly, and why the industry avoids it.

By Auryth Team

Every legal AI vendor claims high accuracy. Ask them for the data, and the conversation gets quiet.

This isn’t an oversight. It’s a strategy. Accuracy claims that can’t be verified aren’t claims — they’re slogans. And the legal AI industry has built an entire sales cycle on slogans that no buyer can audit.

We think that should change. Not because we’re braver than anyone else, but because professionals who stake their reputation on AI-assisted research deserve to see the report card before they trust the tutor.

The accuracy illusion

The gap between what legal AI vendors promise and what they can prove is wider than most buyers realise.

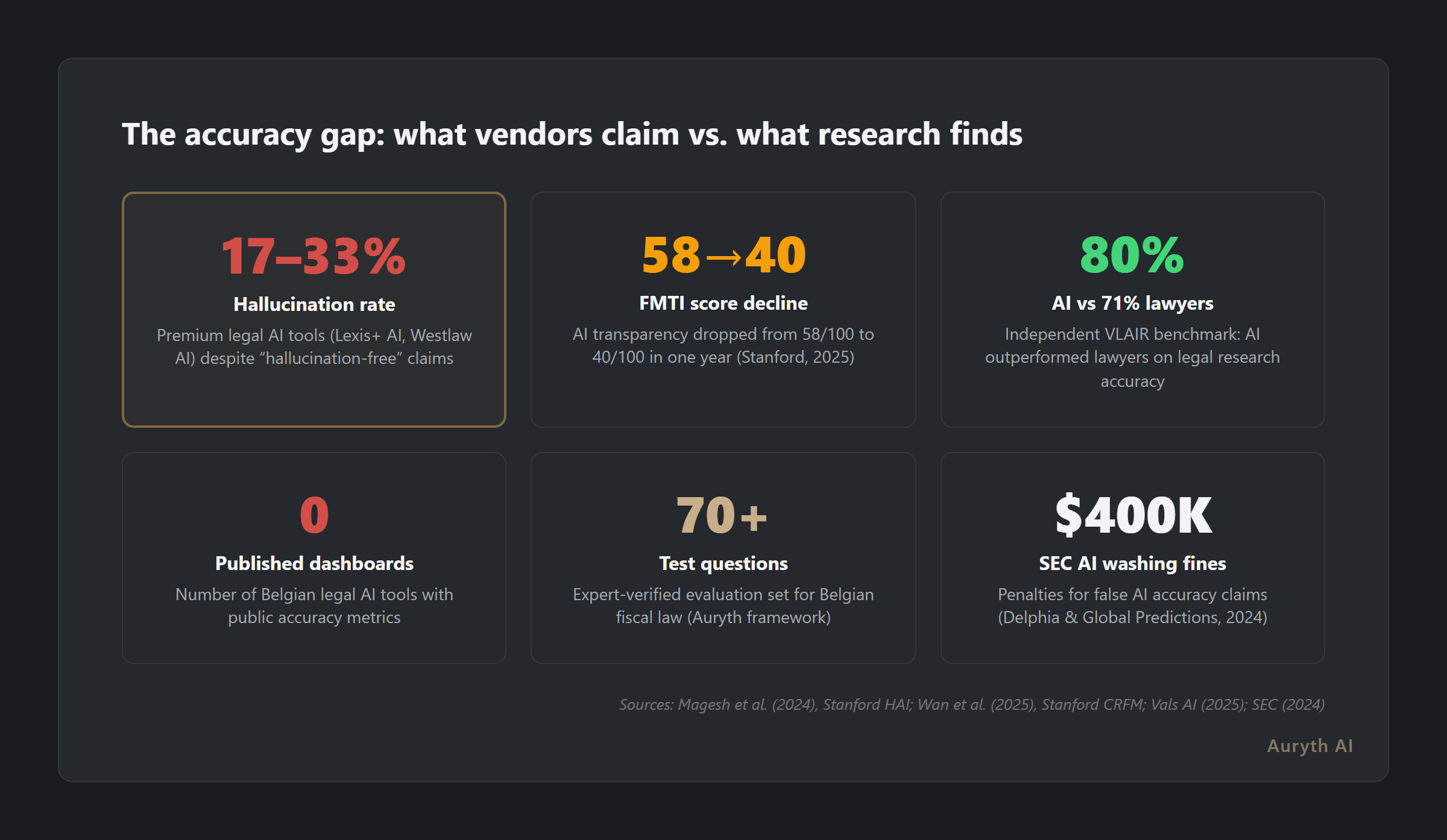

Stanford researchers tested the leading RAG-based legal research tools — Lexis+ AI, Westlaw AI-Assisted Research, and Ask Practical Law AI — in the first preregistered empirical evaluation of its kind. Despite marketing claims of “hallucination-free” results, every tool hallucinated between 17% and 33% of the time. Lexis+ AI was the highest-performing system, accurately answering 65% of queries. Westlaw’s AI-assisted research was accurate 42% of the time but hallucinated nearly twice as often as the other tools tested (Magesh et al., 2024).

These aren’t obscure research prototypes. These are the tools that the largest law firms in the world pay premium subscriptions for. And until Stanford tested them independently, no buyer had any way to verify the marketing.

Accuracy without methodology is just a number. Methodology without publication is just a promise.

Why the industry avoids measurement

Publishing accuracy metrics is expensive, uncomfortable, and competitively risky. Here’s why most vendors choose silence instead:

| Barrier | Why it stops measurement |
|---|---|
| No standard benchmark | Legal AI has no equivalent of ImageNet or GLUE. Every vendor defines “accuracy” differently: some count citation existence, others count substantive correctness, others count user satisfaction |
| Measurement is domain-specific | A benchmark that works for US case law is meaningless for Belgian fiscal law. Building a valid test set requires domain experts, not just engineers |
| Results expose weaknesses | A published 87% accuracy rate invites the question: “What about the other 13%?” Vendors prefer vague claims over specific numbers that can be scrutinised |
| Temporal decay | Legal accuracy isn’t static. A system that scored 92% in January may score 84% in June after a programme law amends dozens of provisions. Continuous measurement requires continuous investment |
| Competitive risk | If you publish and your competitor doesn’t, buyers compare your honest 88% against their implied “near-perfect.” The honest vendor looks worse |

This creates a race to the bottom of transparency. The rational vendor strategy is: claim accuracy loudly, measure it never.

The transparency recession

The problem extends well beyond legal AI. Stanford’s Foundation Model Transparency Index — the most comprehensive assessment of AI company disclosure — found that transparency across the industry declined from an average score of 58 out of 100 in 2024 to just 40 in 2025, reversing the prior year’s progress entirely (Wan et al., 2025).

Meta’s score dropped from 60 to 31. Mistral’s fell from 55 to 18. The companies building the foundation models that legal AI tools depend on are becoming less transparent, not more.

For legal professionals, this matters directly. When the foundation models become more opaque, the tools built on top of them inherit that opacity. You’re not just trusting your legal AI vendor’s accuracy claims — you’re trusting a chain of claims that nobody in the chain can fully verify.

What honest measurement looks like

Publishing accuracy isn’t just putting a percentage on a webpage. It requires infrastructure that most vendors haven’t built:

1. A golden dataset built by domain experts

Not generated by AI. Not scraped from existing benchmarks. Hand-crafted questions with verified answers, covering the full complexity of the target domain. For Belgian tax law, that means temporal questions (what was the law in 2019?), regional comparisons (how does Flanders differ from Brussels?), cross-domain analysis (what are all tax consequences of a TAK 23 product?), and edge cases where the law is genuinely ambiguous.
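As a rough illustration of what one entry in such a dataset could look like, here is a minimal Python sketch. The schema, field names, category labels, and the `source-A` / `expert-1` placeholders are all assumptions made for this example, not a description of any real evaluation set.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    TEMPORAL = "temporal"          # "what was the law in 2019?"
    REGIONAL = "regional"          # "how does Flanders differ from Brussels?"
    CROSS_DOMAIN = "cross_domain"  # all tax consequences of one product
    EDGE_CASE = "edge_case"        # the law is genuinely ambiguous


@dataclass(frozen=True)
class GoldenQuestion:
    question: str
    category: Category
    expected_sources: frozenset[str]  # placeholder IDs for the provisions a full answer needs
    verified_by: str                  # hypothetical ID of the human expert who checked the answer

    def __post_init__(self) -> None:
        # An entry that no human verified does not belong in a golden dataset.
        if not self.verified_by.strip():
            raise ValueError("every golden question needs a human verifier")


def coverage(dataset: list[GoldenQuestion]) -> dict[Category, int]:
    """Count questions per category, including categories with zero entries."""
    counts = Counter(q.category for q in dataset)
    return {category: counts[category] for category in Category}


# Illustrative entries only; the source IDs and expert IDs are placeholders.
dataset = [
    GoldenQuestion("What was the law in 2019?", Category.TEMPORAL,
                   frozenset({"source-A"}), verified_by="expert-1"),
    GoldenQuestion("How does Flanders differ from Brussels?", Category.REGIONAL,
                   frozenset({"source-B", "source-C"}), verified_by="expert-2"),
]
print(coverage(dataset))
```

Reporting coverage per category, zeros included, makes it obvious when a test set only exercises the easy question types.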

2. Continuous evaluation, not one-time testing

A single benchmark run is a snapshot, not a system. Laws change. Models are updated. Corpus content evolves. Honest measurement means running evaluations continuously and publishing the trend, not the peak.
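The difference between a trend and a peak can be sketched in a few lines of Python. The `EvalRun` record and the helper names are hypothetical, and the two runs reuse the illustrative 92% and 84% figures from the table above with made-up dates; they are not real results.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class EvalRun:
    run_date: date
    correct: int
    total: int

    @property
    def accuracy(self) -> float:
        return self.correct / self.total


def trend(runs: list[EvalRun]) -> list[tuple[date, float]]:
    """Every run in chronological order: the full history, dips included."""
    ordered = sorted(runs, key=lambda r: r.run_date)
    return [(r.run_date, r.accuracy) for r in ordered]


def peak(runs: list[EvalRun]) -> float:
    """The best score ever recorded: what a snapshot-only report would show."""
    return max(r.accuracy for r in runs)


def latest(runs: list[EvalRun]) -> float:
    """The score from the most recent run, whatever it happens to be."""
    return max(runs, key=lambda r: r.run_date).accuracy


# Illustrative numbers only: a January run, then a June run after a law change.
runs = [
    EvalRun(date(2025, 6, 1), correct=84, total=100),
    EvalRun(date(2025, 1, 1), correct=92, total=100),
]
print("peak:", peak(runs))
print("latest:", latest(runs))
print("trend:", trend(runs))
```

Publishing only `peak` would hide the June drop; publishing `trend` shows it.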

3. Multi-dimensional scoring

“Accuracy” is not one number. It’s at least three:

| Dimension | What it measures |
|---|---|
| Citation accuracy | Do the cited sources exist? Do they say what the system claims? |
| Substantive correctness | Is the legal conclusion right, given the cited sources? |
| Completeness | Did the system find all relevant provisions, or just the obvious ones? |

A system that scores 95% on citation accuracy but 60% on completeness is dangerous — it gives you correct but incomplete advice with high confidence.
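One way to keep the three dimensions separate in a report is sketched below in Python. It assumes completeness is measured as the share of expected provisions that were actually cited; that definition, the 0.80 threshold, and the 0.90 substantive-correctness figure are assumptions for illustration only. The 95% and 60% figures are the ones from the example above.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Scores:
    citation_accuracy: float
    substantive_correctness: float
    completeness: float


def completeness_score(found: set[str], expected: set[str]) -> float:
    """Share of the expected provisions that the answer actually cited."""
    if not expected:
        return 1.0
    return len(found & expected) / len(expected)


def weakest(scores: Scores) -> tuple[str, float]:
    """The lowest-scoring dimension, which an average would smooth over."""
    return min(asdict(scores).items(), key=lambda item: item[1])


def below_threshold(scores: Scores, threshold: float) -> list[str]:
    """Names of the dimensions that fall under a chosen (hypothetical) bar."""
    return [name for name, value in asdict(scores).items() if value < threshold]


# 0.95 and 0.60 come from the example above; 0.90 is a placeholder value.
scores = Scores(citation_accuracy=0.95, substantive_correctness=0.90, completeness=0.60)
average = sum(asdict(scores).values()) / 3

print("average:", round(average, 2))
print("weakest:", weakest(scores))
print("below 0.80:", below_threshold(scores, 0.80))
```

A single averaged figure would look respectable here, while the per-dimension view flags completeness as the problem.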

4. Published methodology

The methodology matters as much as the score. How were questions selected? Who verified the answers? What counts as “correct”? Without published methodology, a score is unfalsifiable — and unfalsifiable claims are worth exactly nothing.

The Vals benchmark and what it reveals

The Vals Legal AI Report (VLAIR) — the first independent benchmark of legal AI tools — offers a useful precedent. In its February 2025 evaluation, Harvey Assistant achieved 94.8% accuracy on document Q&A, outperforming the lawyer control group on four of seven tasks. In the October 2025 legal research evaluation, all tested AI tools scored around 80% accuracy versus 71% for lawyers (Vals AI, 2025).

But the VLAIR also revealed a critical gap: while general-purpose AI matched specialised legal AI on raw accuracy, it lagged significantly on authoritativeness — scoring 70% versus the legal AI average of 76%. Access to curated legal databases and structured citation sources still matters.

The bigger story, though, is who participated. Harvey participated. Alexi participated. Counsel Stack participated. Several major players — including Thomson Reuters and LexisNexis — did not. When the industry’s largest vendors opt out of independent evaluation, the gap between marketing and measurement stays wide.

Common questions

Why don’t more legal AI companies publish their accuracy metrics?

Publishing accuracy requires building evaluation infrastructure, accepting public scrutiny, and investing in continuous measurement. Most vendors calculate that vague claims carry less commercial risk than specific numbers — because specific numbers can be challenged. This calculus changes when buyers start demanding evidence.

What is a good accuracy rate for legal AI?

There is no universal answer, because “accuracy” covers at least three things: citation accuracy, substantive correctness, and completeness. A system that gets the right answer but misses relevant exceptions is technically “accurate” on the question asked but professionally dangerous. Measuring all three dimensions, and re-measuring as the law changes, matters more than any single number.

How can I verify a vendor’s accuracy claims myself?

Ask three questions: (1) What benchmark methodology do you use? (2) Who built and verified the test set? (3) Can I see the historical trend, not just the current score? If the vendor can’t answer all three, the claim is marketing.

Why we chose to publish

We publish our accuracy metrics for a simple reason: we think you should be able to verify the claims of any tool you stake your professional reputation on.

Our evaluation framework covers 70+ expert-verified questions across Belgian fiscal law — temporal questions, regional comparisons, cross-domain analysis, authority hierarchy awareness, and deliberate edge cases where the law is ambiguous or contradictory. We run these continuously, not once. We publish the trend, not the peak.

When our accuracy dips — and it will, because programme laws amend dozens of provisions at once — that dip will be visible. We consider that a feature, not a bug. A public drop in accuracy after a major legislative change proves the measurement is real. A score that never changes proves only that nobody is checking.

Every inaccuracy report we receive becomes a new test case. The system gets measurably better every week. That’s the point: transparency isn’t a marketing gesture. It’s an improvement engine.

The SEC has fined companies for “AI washing”: making false or misleading claims about AI capabilities. In 2024, Delphia and Global Predictions paid a combined $400,000 in penalties for overstating their use of AI (SEC, 2024). In 2025, the enforcement expanded to public companies. The regulatory direction is clear: unverifiable AI claims will increasingly carry legal consequences.

We’d rather show you an honest score and explain the gaps than claim perfection and hope you don’t check.

Related articles

- Why transparency matters more than accuracy in legal AI

- What is confidence scoring — and why it’s more honest than a confident answer

- What the Stanford hallucination study actually revealed

- How to evaluate a legal AI tool: 10 questions that actually matter

How Auryth TX applies this

Auryth TX publishes a live accuracy dashboard covering citation accuracy, substantive correctness, and cross-domain completeness across Belgian fiscal law. The evaluation set is built and verified by tax practitioners — not generated by AI or borrowed from generic benchmarks.

Every metric is continuously updated. When a programme law amends the legal corpus, our scores reflect the disruption in real time. When a user reports an inaccuracy, it enters the test suite permanently. The result is a system that gets measurably, verifiably better — and a dashboard that proves it.

We believe this should be table stakes for any tool that asks for professional trust. If your AI vendor won’t show you their accuracy data, ask yourself what they’re optimising for.

See our accuracy metrics for yourself — and decide whether your current tool can match the transparency.

Sources:

1. Magesh, V. et al. (2024). “Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools.” Journal of Empirical Legal Studies.

2. Wan, A. et al. (2025). “The 2025 Foundation Model Transparency Index.” Stanford CRFM.

3. Vals AI (2025). “Vals Legal AI Report — Legal Research.” VLAIR October 2025.

4. U.S. Securities and Exchange Commission (2024). “SEC Charges Two Investment Advisers with Making False and Misleading Statements About Their Use of Artificial Intelligence.” Press Release 2024-36.